In January 2020, I began a very pleasant internship at NASA's Jet Propulsion Laboratory, studying graphics preprocessors and how to exploit parallelism in large terrain-rendering tasks. Since I had barely any background in either of these very interesting subjects and needed to build a bridge between the two, I went to the JPL library nearly every day. That is, until the work-from-home order struck. So this has been my Plague Reading List, Part 1.

Standard C++ IOStreams and locales : advanced programmer’s guide and reference

Earths of distant suns : how we find them, communicate with them, and maybe even travel there

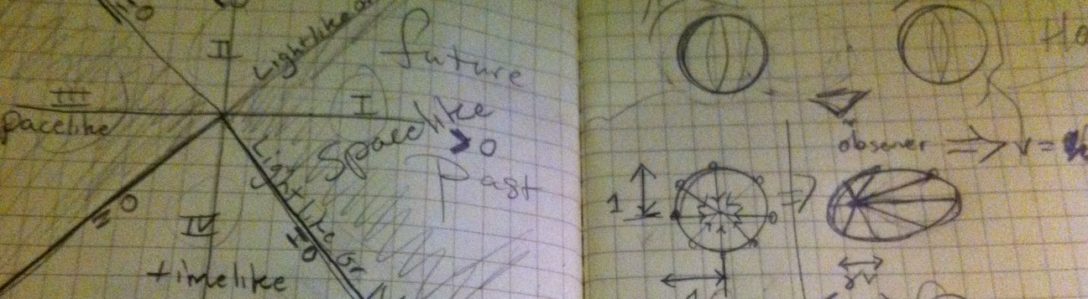

Introduction to applied nonlinear dynamical systems and chaos

Graph-based natural language processing and information retrieval

Explanatory nonmonotonic reasoning

Learning GNU Emacs

Computer systems : digital design, fundamentals of computer architecture and assembly language

Qualitative approaches for reasoning under uncertainty

MySQL reference manual : documentation from the source

Introduction to high performance computing for scientists and engineers

Data communications and networks : an engineering approach

Extraterrestrial intelligence

High performance scientific and engineering computing : hardware/software support

Regression modeling strategies : with applications to linear models, logistic regression, and survival analysis

Machine learning and systems engineering

Concrete abstract algebra : from numbers to Gröbner bases

Nets, terms and formulas : three views of concurrent processes and their relationship

Mathematical foundations of computer science

Categorical data analysis

Matching theory

Data integration blueprint and modeling : techniques for a scalable and sustainable architecture

Parameterized complexity

High performance computing : paradigm and infrastructure

TCP/IP protocol suite

Large-scale C++ software design

Encountering life in the universe : ethical foundations and social implications of astrobiology

Other minds : the octopus, the sea, and the deep origins of consciousness

Modern C++ design : generic programming and design patterns applied

Reverse engineering of object oriented code

Software abstractions : logic, language and analysis

Extraterrestrial languages

Algorithms and theory of computation handbook

Secure programming cookbook for C and C++

Archaeology, anthropology, and interstellar communication

Semiparametric theory and missing data

The pocket handbook of image processing algorithms in C

Design of experiments : ranking and selection : essays in honor of Robert E. Bechhofer

Designing digital systems with SystemVerilog

C++ network programming

Intelligent control and computer engineering

Operations research : an introduction

Logic-based methods for optimization : combining optimization and constraint satisfaction

Applied combinatorial mathematics

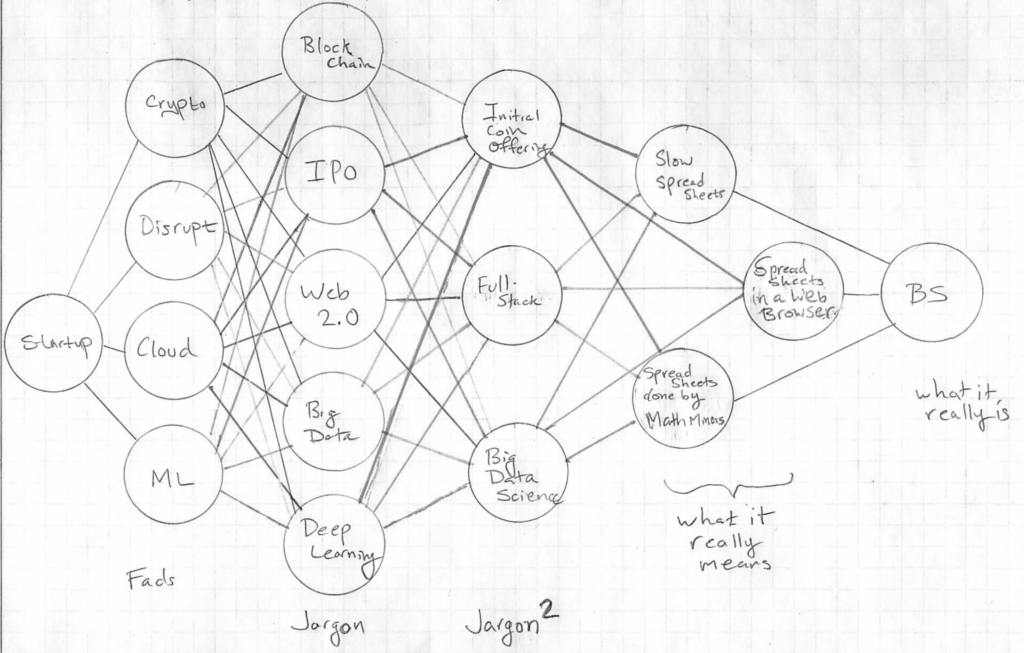

Visual complexity : mapping patterns of information

Dawn of the new everything : encounters with reality and virtual reality

Handbook of logic and language

Recurrent neural networks : design and applications

Handbook of computational methods for integration

Data structure programming : with the standard template library in C++

If the universe is teeming with aliens … where is everybody? : fifty solutions to the Fermi paradox and the problem of extraterrestrial life

The computational beauty of nature : computer explorations of fractals, chaos, complex systems, and adaptation